No, not basically no.

https://mashable.com/article/openai-o3-o4-mini-hallucinate-higher-previous-models

By OpenAI’s own testing, its newest reasoning models, o3 and o4-mini, hallucinate significantly higher than o1.

Stop spreading misinformation. The company itself acknowledges that it hallucinates more than previous models.

Yet again… You fundamentally have the wrong answer…

https://en.wikipedia.org/wiki/GitHub_Copilot

https://github.com/features/copilot

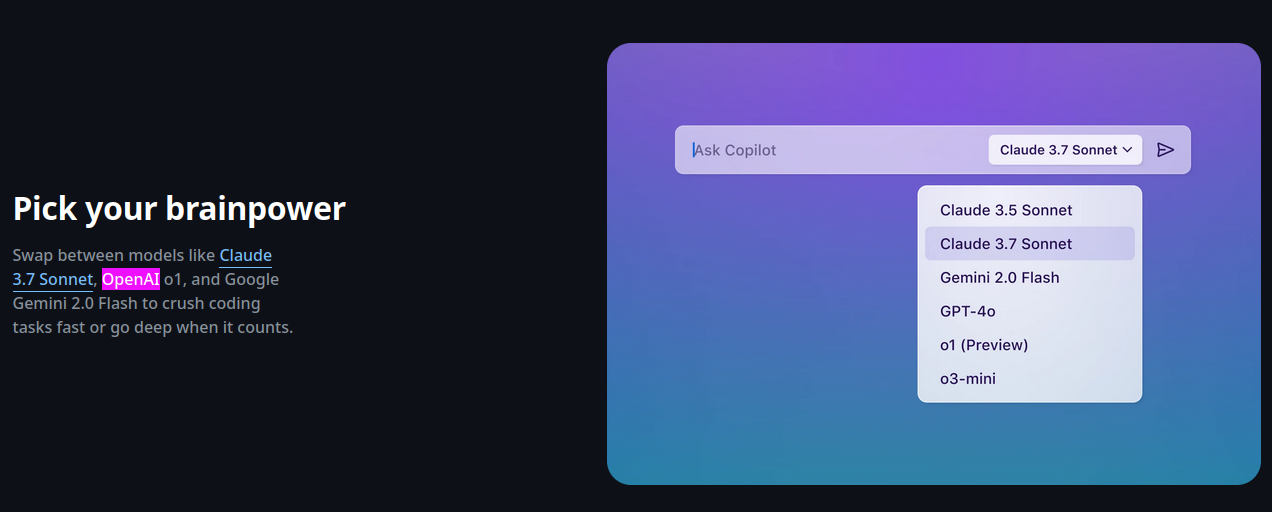

GitHub copilot was literally developed WITH OpenAI the creators of ChatGPT… and you can run o1, o3, o4 directly in there.

https://docs.github.com/en/copilot/using-github-copilot/ai-models/changing-the-ai-model-for-copilot-code-completion

It defaults to 4o mini.